This post is by far the silliest post by me but again very useful and handy :)

This one has been the most popular tip among by developers and no indexing/query tuning tip impressed them this much. So whats that brilliant(!) tip ?

On a Query Analyzer/Management Studio (SSMS), If you want to find the properties of the table just select the table name and press ALT + F1. Provides the Columns in the table,constraints,indexes,Identity column details etc. Actually it provides the result of sp_help. Grab a look at the picture provided below.

Just a word of caution. Length column expressed by sp_help is size of a column in bytes.So for a nvarchar column length 100 means that column can hold a max of 50 characters only. If you are used to seeing column properties from design view in Enterprise manager/SSMS then can fall to this trap.Though all of us know about the nchar/nvarchar's 2 byte per character storage its just one of those things which one needs to bear in mind while using this shortcut key.

Other Shortcuts available by default are

* ctrl + 1 -> sp_who

* ctrl + 2 -> sp_lock

You can customize it, as it is available under Tools -> Customize in Query Analyzer and Tools->options in SSMS.Picture provided below.

Note that you can even add queries. I have added a look up on sysprocesses to have a quick look on whats happeining on my server and sp_spaceused for approximate row counts :)

Monday, December 28, 2009

Alt + F1

Monday, December 21, 2009

Table Variable -> Recompilations,Statistics and Parallel Plans

My last post on Table variable vs Temp table ended with comparisons of their performance at different scenarios. This one not essentially a part -3, but will deal with few characteristics of table variable.

By definition, Table variables are never recompiled. Just to provide little intro about Recompilations,Recompilation reloads a query plan into the cache. That is , the current plan prepared for the statement is marked as invalid and a new plan is loaded into the cache and this process is briefly put as recompilation. Recompilations happen when statistics are updated to an object,when the definition of an object changes and a few other scenarios. As stats are never maintained and the structure of a declared table variable can never change, table variables never cause a recompilation. Though Recompilations come at a cost, they are useful in maintaining the correct stats for our objects and hence its a trade off between Recompilation cost vs Updated stats.

Consider the following query:

DECLARE @dt DATETIME

SELECT @dt = Getdate()

DECLARE @tb TABLE(

row_number INT PRIMARY KEY,

supplier_id INT,

product_id INT,

trans_city VARCHAR(100),

trans_date DATETIME,

qty INT ,

UNIQUE (supplier_id,row_number ))

INSERT INTO @tb

SELECT Row_number()

OVER(ORDER BY supplier_xid),

supplier_xid,

product_xid,

trans_city,

trans_date,

qty

FROM supplier_product_customer

SELECT *

FROM @tb

WHERE row_number = 100

SELECT *

FROM @tb

WHERE supplier_id = 1

SELECT Datediff(ms,@dt,Getdate())

The table contains 110k rows.

As you may notice, I am having a table variable, a Primary Key on the column row_number which implies, it would have a clustered index on it and a Unique key on Supplier_id,row_number which will create a non clustered index on it.There is a myth going around saying that you cant have Non clustered indexes on table variable. It is true that you cant directly create NC index but you can do so indirectly by making unique keys. Though, the NC index on table variable is not all that useful, but just wanted to remind that it is possible to create one.ok.Now for the query plan..

As you may notice the highlighted section, which shows a clear differnce between Estimated rows and actual rows.When you use table variable the estimated count is always 1 as it has no stats for judge the number of rows.

In comparison, consider the same code against a temporary table

SET statistics io ON

DECLARE @dt DATETIME

SELECT @dt = Getdate()

CREATE TABLE #tb (

row_number INT PRIMARY KEY,

supplier_id INT,

product_id INT,

trans_city VARCHAR(100),

trans_date DATETIME,

qty INT)

CREATE INDEX [CIX_supplier_product_customer] ON #tb (

supplier_id)

WITH (statistics_norecompute = OFF,

sort_in_tempdb = OFF,

ignore_dup_key = OFF,

drop_existing = OFF,

online = OFF,

allow_row_locks = ON,

allow_page_locks = OFF)

ON [PRIMARY]

INSERT INTO #tb

SELECT Row_number()

OVER(ORDER BY supplier_xid),

supplier_xid,

product_xid,

trans_city,

trans_date,

qty

FROM supplier_product_customer

SELECT *

FROM #tb

WHERE row_number = 100

SELECT *

FROM #tb

WHERE supplier_id = 1

SELECT Datediff(ms,@dt,Getdate())

one small difference. I am creating NC index directly on supplier_id instead of a unique key,as we can directly create a NC index in temp tables. Query plan for the same provided below.

Few things to infer from the query plan. The Actual rows and estimated rows are perfect, as stats are present. Second inference is that Query uses the non clustered index. Table variable doesnt use the NC index created and uses a cluster scan instead. NC index usage would have been effective as the Number of IOs for executing the query 'Select * from #tb where supplier_id = 1' was 38 and the same query against the table variable was 631.

The query 'Select * from #tb where supplier_id = 1' was repeated at 100/1000/10k/100k rows and at all cases NC index on table variable was never used.I observed that NC index created on table var got used only when the NC index covered the query.ie. When the query was changed to 'Select supplier_id,row_number from #tb where supplier_id = 1'. So, the NCIndex on a table variable is not a great idea as the storage engine finds it effective to do a scan most of the times.

On the other hand, the Temp table uses the NC index and provides results at lesser number of IOs.But still, time taken still marginally favours table variables and so whether indexes are getting used or not, table variables win when the queries are fairly simple.

Now for another aspect of table variables:

BOL states that table variable doesn't use parallel plans for updates and deletes.

Table variables don't use parallel plans as effectively as temp tables do.If your 'select' query would gain from Parallel plans then you are better off going for temp tables. Just a example query:

SELECT t1.supplier_id,

t1.product_id,

Count(1)

FROM #tb t1,

#tb t2

WHERE t1.product_id = t2.product_id

AND t1.trans_city = t2.trans_city

AND t1.supplier_id != t2.supplier_id

GROUP BY t1.supplier_id,

t1.product_id

HAVING Count(1) > 100

ORDER BY t1.supplier_id,

t1.product_id

Query plan for the same is provided below.

The query finished in about 5 seconds with a parallel plan . The table had 50,000 rows.

The query plan for the same query when table variable is used is provided below.

Query plan doesnt use a Parallel plan ( Degree of parallelism = 1 ). The query took 16 minutes to complete.5 seconds against 16 minutes makes a lot of difference. So, a Parallel plan for complex queries can provide a huge improvement on performance. So, if the query is likely to use parallel plans and its likely to gain out of it ( there are few exceptions where parallelism can slowdown as well which we will discuss on another day ).

Though the table variable doesnt use stats/parallel plans, for simpler queries with relatively small row counts table variables appear to be a fair choice. For Decision making/Datawarehouse type of applications which involve complex calulations with huge rows the balance shifts sharply towards temp tables.

Monday, December 14, 2009

Error - Invalid length parameter passed to the SUBSTRING function.

This is my 25th post. kind of special. :)

So, I am starting a new cateogory called 'Errors'. Under Errors we will deal with those sudden errors which pop up out of the blue. A bit of ( sometimes a lot )

of investigation clears the air and provides a good story to tell.I have one such story to tell today.

I had one developer who came to my desk and said 'Suddenly my stored procedure is failing with the following error

'Invalid length parameter passed to the SUBSTRING function.'

The developer told me that the Stored procedure was running absolutely fine for the

last 2 years in production and there has been no change on it.( This is somethng all

developers say :) ). True, there was no change on the sp. With bit of debugging, i found that the following query was failing.

SELECT *

FROM dbo.authors_test

WHERE Charindex(' ',city) - 1 > 0

AND auto_id IN (SELECT auto_id from authors_test

WHERE Left(city,Charindex(' ',city) - 1) = 'San')

Its obvious that the query was failing because of the function

left(city,charindex(' ',city) -1 ).Function left(city,charindex(' ',city) -1 ) would get a negative 2nd parameter when there is no space in the column city. So the query would fail when it applies the function on any row which doesnt contain ' ' on the column city. But, however if thats the case then the query should have failed long back and not all of a sudden. And also, the developer claimed that as he has done the checking charindex(' ',city) -1 > 0 on outer query to filter out all the rows without the space are filtered out first.Let us check the actual reason the query failed.

The table had a primary key clustered index in auto_id. No other indexes.

SELECT *

FROM dbo.authors_test

WHERE Charindex(' ',city) - 1 > 0

AND auto_id IN (SELECT auto_id from authors_test

WHERE Left(city,Charindex(' ',city) - 1) = 'San')

When I 1st ran the query the table contained little over 90 records and it failed with the error

'Invalid length parameter passed to the SUBSTRING function.'

To check the claim of the application team that the query ran perfectly few days before,I took backup of the database that was few days old and ran the same query. It worked perfectly. The table contained around 50 odd rows and the query worked fine. When checked with application team on the row count, they replied saying the increase in the row count was normal and the data didnt have any dirty/junk/Null values on city column.

So difference in row counts gave me a bit of clue and I started comparing the query plans of the old and new table. First the old one had a query plan which is shown below.

As you can understand, the query plan works in the following way

Step 1 : filter out the rows using the condition

charindex(' ',city) -1 > 0.

uses a Clustered index scan as there are no useful index to use.

Output out of the Clustered index scan operator is auto_id as it

will be used to join with the sub query.

Step 2 : Use a Nested loop join operator to join with the sub query.

Step 3 : Uses a Clustered index seek operator with auto_id as input from

outer query.Clustered index seek operator uses the Clusered

index to directly jump to the row with auto_id it is interested

in. After finding the row directly it applies the conditon

left(city,charindex(' ',city) -1 ) only against the

auto_id selected.

Now let us look at the same query that fails on the latest database.

The estimated query plan( only estimated plan possible as the query fails and hence full plan is not available) shows two differences.

1) The Clustered index seek operator is replaced by Clustered index scan operator at the inner loop ( sub query ).

2) Nested loop join is replaced by Merge join.

The query plan clearly provides the reason why the query failed. As in the second case the Clustered index scan operator on the subquery would scan all the rows in the table for the condition left(city,charindex(' ',city) -1 ) and then match it with the input auto_id. So , in that case though the sub query will read all the values in the table which means charindex(' ',city) -1 ) > 0 condition

specified at the outer query is in vain. The query took a clustered index scan instead of seek as there were more rows in the table than before. The optimizer swithces from a seek to scan when it feels that the cost of seek( mostly IOs ) would be more than that of a scan. How it decides is a potential post on its own, which I will perhaps cover later.As of now, understand that a switch from seek to scan can occur beacuse of data distribution/missing statistics/fragmented indexes etc.

So, a change in query plan can not only slow down your system, but also cause runtime errors in scenarios like this. This also proves the importance of reindexing, as a seek can become a scan even when a reindexing

job fails.So, lessons learnt are

> Always ensure you read the query plan before you deploy

any code to production

> Try your best to visualize the table load and perform data

validations in a fool proof way.

> Make sure reindex jobs are running at the correctly, so

that the queries pick the correct plan.

Noticed that for such queries SQL 2k5 takes a cluster scan and hence errors are indicated early. But still, as any RDBMS changes query plans with respect to the factors explained above, its good to be wary of such scenarios.

Monday, December 7, 2009

Temp table vs Table Variable - Part ii

Before reading this one,please read my previous post here which speaks about basic differences in temp table and table variable.

As mentioned last week, let me run thro the tests I conducted for understanding the performance of temp table against table variable.

Consider the following code:

DBCC freeproccache

DBCC dropcleanbuffers

SET statistics io ON

DECLARE @dt DATETIME

SELECT @dt = Getdate()

CREATE TABLE #tb (

supplier_id INT,

product_id INT,

trans_city VARCHAR(100),

trans_date DATETIME,

qty INT)

INSERT INTO #tb

SELECT DISTINCT supplier_xid,

product_xid,

trans_city,

trans_date,

qty

FROM supplier_product_customer

CREATE CLUSTERED INDEX [CIX_supplier_product_customer] ON #tb (

supplier_id,

trans_city)

WITH (statistics_norecompute = OFF,

sort_in_tempdb = OFF,

ignore_dup_key = OFF,

drop_existing = OFF,

online = OFF,

allow_row_locks = ON,

allow_page_locks = OFF)

ON [PRIMARY]

SELECT *

FROM #tb

WHERE supplier_id = 1721

AND trans_city = 'Mumbai'

SELECT Datediff(ms,@dt,Getdate())

As you may note, I am insterting a few rows on the temp table. And then I create a clustered index. Fire a straight forward select query.What matters in the code is the section after the insert into the temp table. ie is Creation of the index and the select query.I have noted down a few relevant parmeters for different row counts on the temp table. The query plan obtained is provided below.

Its as expected, a Clustered Index seek which means that the index is getting used.

Let me execute a similar query against table variable. Code posted below.

DBCC freeproccache

DBCC dropcleanbuffers

SET statistics io ON

DECLARE @dt DATETIME

SELECT @dt = Getdate()

DECLARE @tb TABLE(

supplier_id INT,

product_id INT,

trans_city VARCHAR(100),

trans_date DATETIME,

qty INT

)

INSERT INTO @tb

SELECT supplier_xid,

product_xid,

trans_city,

trans_date,

qty

FROM supplier_product_customer

SELECT *

FROM @tb

WHERE supplier_id = 1721

AND trans_city = 'Mumbai'

SELECT Datediff(ms,@dt,Getdate())

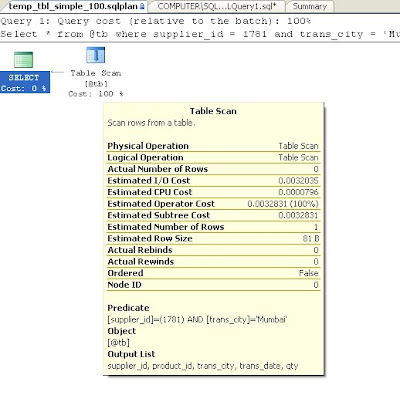

Query plan obtained for the same is provided below. A straight table scan on table variable as there was no index.

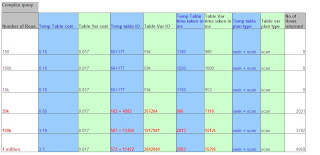

Querycost/IO/time taken for 100/1000/10000/20k/100k rows have been recorded and tabulated below.Please click on the image to view results clearly.

The table compares Query cost, IO, time taken parameters of temp table and table variable.While calulating IOs, one need to take into account the IOs incurred by creating the index as well.So IOs for temp table are represented as 1 + 2, where the number before the '+' sign indicates the IOs incurred by creating the index.

For creating the index, one needs to scan the entire table once. So the above code on using temp table would incur IOs for scanning the table once + IOs for fetching the required row(s). On the other hand, as the query is simple,a table variable can obtain the required row(s) by scanning the table once. The above code using table variable would always incurr the IOs required to scan the entire table once,iresspective of number of in the table.So on IOs front, for a query which as simple as the above one, table variables would always consume less when compared to Indexed temp table.

For time consumed, table variable is faster even at 100k rows. Query cost,which is a number telling you how hard your machine needs to work to execute the query also favours table variables in this context.So when the query is simple and striaghtforward, table variables provide better performance.

Now for the another scenario where a slightly complex query is executed against temp table / table variable.The query attempts to find the city which obtained maximum sales for each supplier.The query against temp table is provided below.

DBCC freeproccache

DBCC dropcleanbuffers

SET statistics io ON

DECLARE @dt DATETIME

SELECT @dt = Getdate()

CREATE TABLE #tb (

supplier_id INT,

product_id INT,

trans_city VARCHAR(100),

trans_date DATETIME,

qty INT)

INSERT INTO #tb

SELECT supplier_xid,

product_xid,

trans_city,

trans_date,

qty

FROM supplier_product_customer

CREATE CLUSTERED INDEX [CIX_supplier_product_customer] ON #tb (

supplier_id,

trans_city)

WITH (statistics_norecompute = OFF,

sort_in_tempdb = OFF,

ignore_dup_key = OFF,

drop_existing = OFF,

online = OFF,

allow_row_locks = ON,

allow_page_locks = OFF)

ON [PRIMARY]

SELECT supplier_id,

trans_city,

Sum(qty)

FROM #tb outer_qry

GROUP BY supplier_id,

trans_city

HAVING Sum(qty) = (SELECT Max(sum_of_qty)

FROM (SELECT Sum(qty) AS sum_of_qty

FROM #tb sub_query

WHERE sub_query.supplier_id = outer_qry.supplier_id

AND sub_query.trans_city = outer_qry.trans_city) x)

SELECT Datediff(ms,@dt,Getdate())

DROP TABLE #tb

The query plan for the same query is provided. The query plan uses a Nested loop join to achieve the resultset.For each supplierId, the clustered index seek is used to fetch the corressponding rows from the inner loop.Clustered index scan is used at outer loop, so that Supplier IDs enter the inner loop in sorted order.So, in

short the clustered index is used effectively.

Similarly the query against table variable is also provided below.

DBCC freeproccache

DBCC dropcleanbuffers

SET statistics io ON

DECLARE @dt DATETIME

SELECT @dt = Getdate()

DECLARE @tb TABLE(

supplier_id INT,

product_id INT,

trans_city VARCHAR(100),

trans_date DATETIME,

qty INT

)

INSERT INTO @tb

SELECT supplier_xid,

product_xid,

trans_city,

trans_date,

qty

FROM supplier_product_customer

SELECT supplier_id,

trans_city,

Sum(qty)

FROM @tb outer_qry

GROUP BY supplier_id,

trans_city

HAVING Sum(qty) = (SELECT Max(sum_of_qty)

FROM (SELECT Sum(qty) sum_of_qty

FROM @tb sub_query

WHERE sub_query.supplier_id = outer_qry.supplier_id

AND sub_query.trans_city = outer_qry.trans_city) x)

SELECT Datediff(ms,@dt,Getdate())

SELECT Getdate()

The query plan is also shown below.Query plan is similar to the one generated for temp table but for few differences.One difference is a table scan at both inner and outer sections of Nested loop operator.Another major difference is additional sort operator required have the rows sorted before passing them to the

inner loop. sort operator can be extremely expensive when run against huge tables.

The results for both the scenarios are tabulated.The results now heavily favour temp tables.Please click on the image to view the results clearly.

On the IOs front temp table always requires lesser IOs as the index proves to be handy. The table variable can only scan and hence the number of IOs increases when the query engine has to read the table multiple times. There is not much of a difference in time taken between the two till 10k rows. But once the

row count reaches 20k the performance of table variable dips badly. Observe the results marked in Red, which show a major performance difference when the table grows bigger. At 1 million rows temp table takes a little less than

3 seconds but table variable takes over 75 seconds which is 25 times slower.

To Summarize, one can go for a table variable when

* Table is really small with a max of 1000 odd rows.

* No complex queries are involved and the table needs

to be read only once/twice.

A temp table is a ideal choice when

* Number of rows are more then 10k

* Queries are complicated and would require multiple passes.

* Query looks like an ideal candidate for indexing.

Anyways, one is strongly encouraged to test with both the options when scenario is not as clear as the examples posted above.

There are few more things to be written. Will do a part-3 as well.