I have always wanted a quick way of Scripting the permissions that have been granted

for an object. I felt it would be handy to get the permission details of an table/sp without going to the enterprise manager. So a small script to do the same.

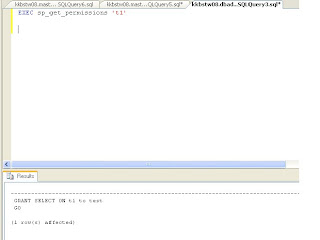

I have wrapped up the script into a SP called 'sp_get_permissions' and uploaded it here.

sp_get_permissions takes object name as parameter and provides the permission script for the specified object. sp_get_permissions also accepts null parameter and provides the permission script for all objects in the database. This would be extremely handy if you are doing deployments between DEV/Staging and Production.

Deploy it in your master database and you can use it from any of your databases.

Sample call:

The sp_get_permission works for both 2000/2005.

Now for the second part of the post..

If you are frequent user of sp_helptext then you would have felt the need

to get the sp/view's create script along with the permissions attached to it.

To do the same I have tweaked the sp_helptext code to make sp_helptext2

which would provide the object's script along with all the permission details.

Download sp_helptext2 from here ,deploy it to your master db and you can use it from any of your databases.

Sample call

Just a small note. These two sps strictly take the object name as the parameter.

So avoid prefixing the object owner name when passing the parameter..ie

EXEC sp_helptext2 'dbo.testProc' doesnt work. But EXEC sp_helptext2 'testProc' does.

Wednesday, October 28, 2009

Getting object permissions

Sunday, October 18, 2009

@@IDENTITY, SCOPE_INDENTITY(), IDENT_CURRENT() - Comparison

Often when one wants to check the last inserted identity value one of the three commands @@IDENTITY, SCOPE_INDENTITY(), IDENT_CURRENT() is used. In this post we will do a comparsion of the three.

@@Identity:

As most you may be aware @@IDENTITY provides the last inserted identity value

for that particular connection. But the thing to note is, it provides you last inserted identity value on the LAST identity table inserted on that connection. Assume, the inserted table A has a trigger inserting into another table B with a identity column. Then @@identity yields identity value of Table B and not table A.

Ident_current() :

Ident_Current('tablename') provides the last inserted identity value across all connections on the table specified. So it cant be used to find the last inserted identity value by your connection.

Scope_Identity(): Scope_identity() is perhaps the most reliable command to find the last inserted identity value on the table by your connection. Provides the last inserted value by the connection not by the underlying trigger.

Couple of things to note:

>If your connection hasnt inserted any rows then @@Identity/Scope_Identity()

returns null.

>Rollback of transaction doesnt reset the identity

Tuesday, October 13, 2009

Transfer logins

Recreate SQL Logins:

Imagine a scenario where you need to uninstall and reinstall

sql server. In such a case you may need to recreate the logins. Assume that

you dont have the password for the sql logins that are to be recreated.

In such a case we have a option of scripting all the logins ( or any )

into a SQL script. SQL Script on execution on the new server will

recreate the logins previously created with the SAME password.

The best thing is the password is not exposed in the script.

This script also works to transfer logins from sql 7 to sql 2000 ,

7 to 2005 and 2000 to 2005.This script and the method is described in detail in Microsoft website.But as this is my blog let me give a brief description of the method.

1) First on the source( old server) create the sps sp_hexadecimal,sp_help_rev_login,

sp_help_revlogin_2000_to_2005( if you are transfering from 2k to 2k5 )

on the master database.

2) On the Query analyzer run

EXEC sp_help_rev_login 'Loginname' --for a single login name

EXEC sp_help_rev_login --for all logins

3) Output will be 'Create Login ...'. Save the output on a .sql file.

Execute the same on the destination server to have the logins created with the same password. Simple. Just a small note.

Have the query analyzer result window in text mode ( ctrl + t) so that

the formating of the output script is not distrubed.

Rest of the details ( and the one repeated here :) ) are given clearly

in microsoft website. Take a look.

For SQL Server 7 and 2000 and for transferring logins from 7 to 2k5 and 2000 to 2005

http://support.microsoft.com/kb/246133

For transferring logins between Sql Server 2005 servers.

http://support.microsoft.com/kb/918992

Sunday, October 11, 2009

Fill Factor - 3

As already mentioned this post will attempt to answer the follwoing question

> While deciding fill factor, What options are we left with when we don't have a good idea of how many inserts are happening?

There are two DMVs which can help us in such a case.

1) sys.dm_db_index_operational_stats

2) sys.dm_db_index_usage_stats

Let us take up the first one - sys.dm_db_index_operational_stats.

Index_operational_stats provides very very valubale info like

> Leaf_insert_count/Leaf_delete_count/Leaf_update_count

> Leaf_allocation_count

As the name suggests the columns Leaf_insert_count,Leaf_delete_count,Leaf_update_count provide

the number of rows inserted,updated,deleted on the index. So when you want to check for the important

table then query for the clustered index of the table as clustered index's leaf level represents

the actual table. It can be done by

SELECT *

FROM sys.dm_db_index_operational_stats

( db_id() , object_id('Supplier_Product_Customer'), 1 , NULL )

WHERE Index_id = 1

Just a small note here. Sometimes the leaf_delete_count can be zero( or less than actual number of deleted rows ) even if deletion had occur ed. In that case one can check leaf_ghost_count which would represent the number of deleted rows. The deleted rows are not physically moved but are marked as deleted ie logical deletion.SqlServer periodically physically removes deleted rows from the disk but not immediately after the deletion.leaf_ghost_count also has number greater than zero when transactions are rolled back.

Leaf_allocation_count indicates the total number of new pages that were allocated to the table.This can relate to the Page splits, if most of your inserts are completely randomized and very little is happening at the tail of the index.

There are quite a few other useful columns row_lock_count,row_lock_wait_in_ms but I will leave them for you to explore.

So, we have all the info that's needed to compute the fill factor. Apply the

Leaf_insert_count,Leaf_delete_count,Leaf_update_count

on the method specified here then you should get your ideal fill factor..right.. But not so easy..Like all wonderful things in life, this one also comes with a drawback. One major drawback is that the details about the table are retained on the

DMV only till the meta data of the table remains in cache. Once the table's meta data moves out of cache, the row containing the useful info is removed from DMV and the values are reset to zero. But how long it will be retained depends on how well your table is getting used and how well other tables are getting used as well.

So, one option you have is to dump the DMV's result periodically into a table and then later use it for analysis. As querying this DMV for a single ( or few ) table doesn't acquire any locks on the actual table,and it is not a resource consuming exercises. But, the analysis can be very tedious because one needs to check whether the table's meta data moved out of the cache or not by comparing the result recorded previous time.For ex: if your number of inserts recorded at 10 AM is less than number of inserts recorded at 9:45 AM then its likely that the table has moved out of the cache between 9:45 and 10. So, analysis can be painful but its the best option available.

Then the Second option we have is sys.dm_db_index_usage_stats .Column of interest is user_updates.There are other interesting columns like user_seeks, user_scans, last_user_seek, last_user_scans but now the one that's needed is user_updates. User_updates column indicates the number of changes that occur ed on the index. In other words,User_updates number represents the total number of insert,update and delete queries that were fired against the table. Point to note

is, its total number of queries and not rows which kind of dents our cause. But it can be useful if you are aware of your queries say something like all your inserts/updates/deletes affect one row or 'x' rows at a time.The good thing is that the DMV retains the value till the next restart of the SQLServer.Still its not a accurate method of measuring as its a cumulative sum of update, delete ,insert queries and doesn't provide the breakup.

After estimating the inserts,updates and deletes and setting the initial fill factor one needs to monitor fragmentation numbers, page count( for checking number of page growth ), page splits and if possible data distribution. Data distribution would help one to check whether the data distribution is skewed and up to what extent.Skewed data can definitely disturb your calculations and make indexes perform

below par. 2k5 doesn't provide a perfect solution for skewed data distribution and its problems.But 2k8 to a certain extent does with Filtered indexes. Will write a piece on that soon.

At last I am kind of done with the series on Fill factors and fragmentation.

Will stay away from this one at least for the next few posts :)

Sunday, October 4, 2009

Identifying Page Splits

I ended my last post on fill factors with following question..

What are the other things that need to be monitored after setting a fill factor?

How do we decide on the fill factor when we are not too sure about the random insert frequency?

But before answering that question, I felt its essential to write about the technique to detect the occurrence of high page splits and directly finding the object having maximum page splits. A table with a high fragmentation value indicates a very very likely chance of it having high page splits. This post will talk about the method to find the object that experienced high page splits within a certain period. I wouldn’t call this post a digression from last topic, but it doesn’t exactly answer the questions with which I ended my last post. But its important for one to know to detect high page splits occurrence this way other than checking it thro fragmentation numbers.

So Whats the starting point..As always in any performance tuning exercise the starting point is Perfmon Counters.I will do a full length post on Perfmon counters later ( may even be a series of posts ) but for the time being I will give a brief 2 line intro of Perfmon, just in case if you are not aware of it. Perfmon is a utility provided along with Microsoft windows, which helps you to monitor various system parameters like CPU/Memory/IO usage/Queue length/ and SQL Server parameters(counters) like Lock waits, Number of transactions,Number of Deadlocks, Number of User connections, Buffer cache hit ratio to name a very few. You can find the list of SQL Server related counters using the DMV/view

Select * from sys.dm_os_performance_counters : if on 2k5

Select * from dbo.sysperfinfo : if you are on 2k

One such counter called Pagespilts/sec under 'SQLServer:Access Methods' object is useful in finding the page splits happening on your server. If you average more than 10 with around( 50-100 active users ) during the day then I really start to worry and perhaps look at options to bring it down. Note the number provided is at server level and its a cumulative figure across all tables and databases. So if you have multiple databases working for multiple applications and each one with different range of users then you are better off monitoring regularly to identify your peak and off peak numbers.

As mentioned the Perfmon counter tells us page splits/sec across server and not which database or table. When a high Page split/sec is noticed the next step would be to find table is most likely responsible for it. For that there is cheeky way of

doing it. That is reading the SQL Server transaction log thro an undocumented function. The function fn_dblog can read a SQL Servers T-Log. It can be used in the following way.

Select * from ::fn_dblog(null,null)

Reading the transaction from the result thrown is bit difficult and cryptic as the function is undocumented and known only to microsoft. But we can make a few intelligent guesses using the columns and values displayed. The following queries can help you identify the object that had high page splits

For 2k:

Select [Object Name], [Index Name], count(1)

from ::fn_dblog(null, null)

Where Operation = N'LOP_DELETE_SPLIT'

Group By [Object Name], [Index Name]

Order By Count(1) desc

The object name would be object you were looking for. Count here refers to the number of rows that were moved because of a split.

For2k5:

Select COUNT(1) AS NumberOfSplits, AllocUnitName , Context

From fn_dblog(NULL,NULL)

Where operation = 'LOP_DELETE_SPLIT'

Group By AllocUnitName, Context

Order by NumberOfSplits desc

This one tells us the number of splits, where AllocUnitName refers to the object name.With these two commands one can find which object is experiencing high page splits. As these commands rely on Transaction log its better you record these values before running Backups against your database or executing a Backup log

So, now we have identified our object with high page splits. But, one can ask why would one need to do this as the Fragmentation Script can detect table with high fragmentation. Consider the following scenario. A table starts after the maintenance with 95% fill factor. Undergoes heavy page splits and becomes 50% full at the middle of maintenance cycle. After that having obtained many inserts it can again become say 90% full before the next maintenance window. So when you record the table fragmentation using the percentage used in fragmentation script it would tell you its 90% full which can mislead a DBA to think that fill factor is working.Fill factor is not doing the best as for more than half the time the table is only half or little over half full. In such a scenario though the internal fragmentation doesn’t indicate the correct picture. Logical fragmentation in the Fragmentation Script should provide hints on problem happening. But anyways, its better to have direct method to monitor high occurrence of page splits as shown above. So, its always important to measure fragmentation levels ( using my script please :) ), pagesplit/sec counter and number of pages on each table.Number of pages on each table can help in identifying the growth of the table/ increase in page count since last maintenance which is very very likely to be due to page splits. When one correlates these parameters, one can clearly confirm the object is involved in high page splits.

So, after confirming the object experiencing high page splits, one can get into the next step of estimating how many random inserts are happening in the table, without having any knowledge about the application.